Compact Descriptors for Sketch-based Image Retrieval using a Triplet loss Convolutional Neural Network

Published in Computer Vision and Image Understanding (CVIU), June 2017. DOI: 10.1016/j.cviu.2017.06.007

University of Surrey, UK.

University of Sao Paulo, Brazil.

Abstract

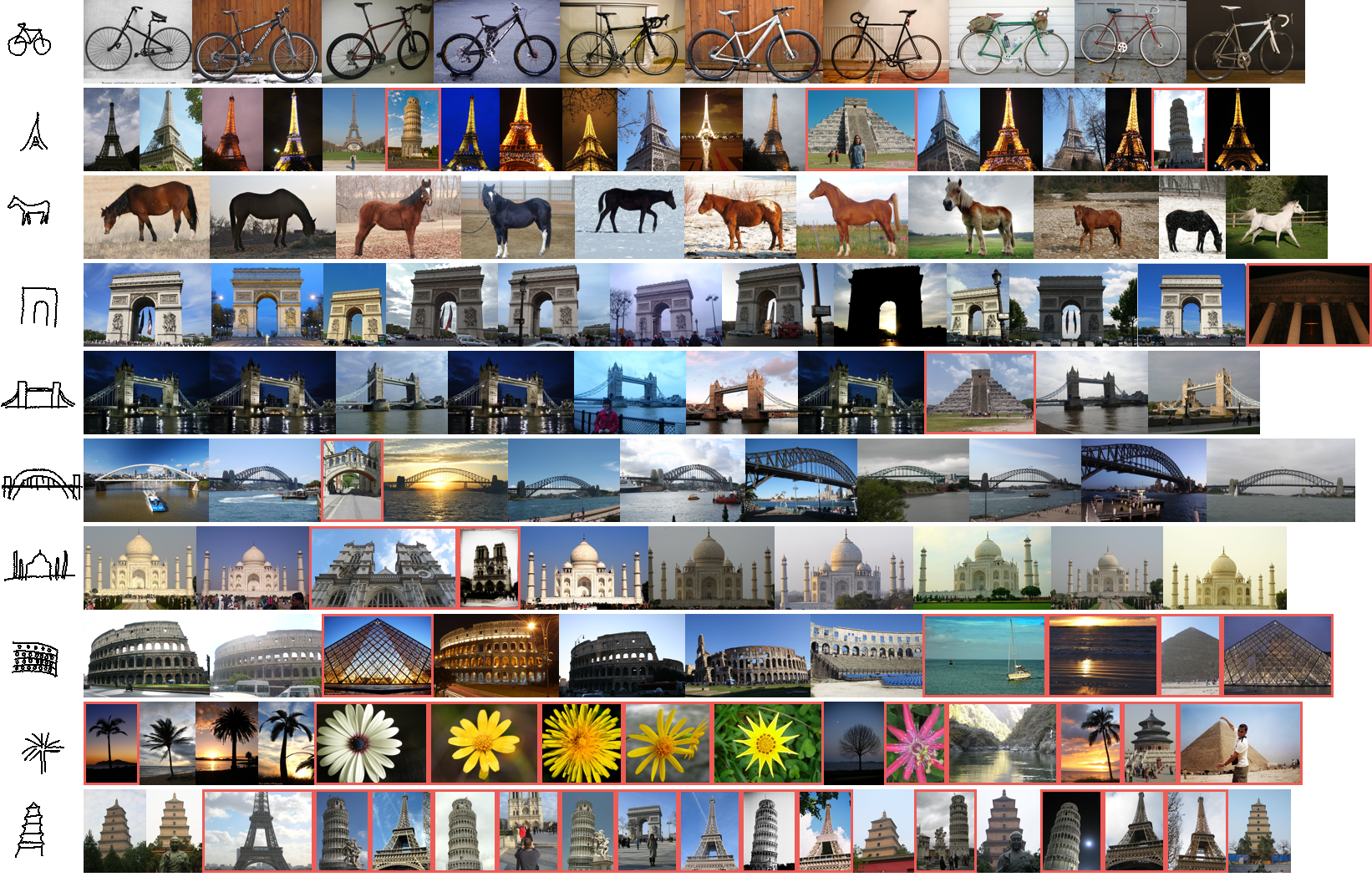

We present an efficient representation for sketch-based image retrieval (SBIR) derived from a triplet loss convolutional neural network (CNN). We treat SBIR as a cross-domain modelling problem, in which a depiction-invariant embedding of sketch and photo data is learned by regression over a siamese CNN architecture with half-shared weights and a modified triplet loss function. Uniquely, we demonstrate the ability of our learned image descriptor to generalise beyond the object categories present in our training data, forming a basis for general cross-category SBIR. We explore appropriate strategies for training, and for deriving a compact image descriptor from the learned representation that is suitable for indexing data on resource-constrained (e.g. mobile) devices. We show that the learned descriptors outperform state-of-the-art SBIR on the de facto standard Flickr15k dataset while using a significantly more compact search index (56 bits per image, i.e. ≈105 KB in total) than previous methods.
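To make the training objective concrete, the following is a minimal sketch of the standard triplet margin loss in NumPy. It is not the modified loss used in the paper, whose exact form is not given on this page; the function name `triplet_loss`, the squared Euclidean distance, and the margin value are assumptions chosen for illustration. Here the anchor plays the role of a sketch embedding, the positive a photo of the same category, and the negative a photo of a different category.

```python
import numpy as np


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet margin loss over a batch of embeddings (one per row).

    Illustrative only: the paper uses a modified variant not reproduced here.
    """
    # Squared Euclidean distance from each anchor to its positive and negative.
    d_pos = np.sum((anchor - positive) ** 2, axis=1)
    d_neg = np.sum((anchor - negative) ** 2, axis=1)
    # Penalise triplets where the negative is not at least `margin` further
    # from the anchor than the positive is.
    return np.maximum(0.0, margin + d_pos - d_neg).mean()


# Toy 2-D embeddings (hypothetical values).
anchor = np.array([[0.0, 0.0]])    # sketch embedding
positive = np.array([[0.0, 1.0]])  # matching photo, squared distance 1
negative = np.array([[3.0, 0.0]])  # non-matching photo, squared distance 9

loss_easy = triplet_loss(anchor, positive, negative)  # margin already satisfied
loss_hard = triplet_loss(anchor, negative, positive)  # roles swapped, violated
```

With these toy values the first triplet already satisfies the margin and contributes no loss, while the swapped triplet is penalised.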

Paper

Compact Descriptors for Sketch-based Image Retrieval using a Triplet loss Convolutional Neural Network (pdf).

Supplementary Materials

1. Dataset

TU-Berlin Sketch (training): 20,000 sketches of 250 categories obtained from Amazon Mechanical Turk (Source).

Flickr25K (training): 25,000 images of the same 250 categories, crawled from Flickr, Google and Bing and resized to a maximum dimension of 256 pixels (1.8 GB) (Download).

Flickr15K (test): ≈15,000 images and 330 sketches of 33 categories (Source, Mirror). Alternatively, a pre-processed package containing image edgemaps and skeletonised sketches, both in LMDB format, can be downloaded here (1 GB).
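The index size quoted in the abstract follows from the size of this test set: 56 bits per image over roughly 15,000 images is about 105 KB. The sketch below checks that arithmetic and shows one way such codes could be searched. It assumes, purely for illustration, that each descriptor is a 56-bit binary code compared by Hamming distance, which may not be how the paper encodes or compares its descriptors; the codes are random placeholders and the helper names `pack_codes` and `hamming_rank` are hypothetical.

```python
import numpy as np

BITS_PER_IMAGE = 56   # from the abstract
NUM_IMAGES = 15_000   # approximate size of Flickr15K

# 15,000 images * 56 bits / 8 bits per byte = 105,000 bytes (about 105 KB).
index_bytes = NUM_IMAGES * BITS_PER_IMAGE // 8


def pack_codes(bits):
    """Pack an (n, 56) array of 0/1 values into an (n, 7) uint8 array."""
    return np.packbits(bits.astype(np.uint8), axis=1)


def hamming_rank(query_code, index_codes):
    """Rank index entries by Hamming distance to a packed query code."""
    xor = np.bitwise_xor(index_codes, query_code)
    dists = np.unpackbits(xor, axis=1).sum(axis=1)
    return np.argsort(dists, kind="stable"), dists


# Random placeholder codes standing in for real descriptors.
rng = np.random.default_rng(0)
bits = rng.integers(0, 2, size=(NUM_IMAGES, BITS_PER_IMAGE))
index_codes = pack_codes(bits)

# Query with the code of image 42; it is at distance 0 from itself.
order, dists = hamming_rank(index_codes[42], index_codes)
```

Packing the bits into bytes is what keeps the in-memory index at 7 bytes per image; an unpacked array of the same codes would be eight times larger.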

2. Pretrained model

Caffe pretrained model with deploy prototxt for image and sketch (50 MB) (Download).

3. Code

Python code for feature extraction (Github).

4. BibTeX

@article{bui2017compact,
  title = {Compact descriptors for sketch-based image retrieval using a triplet loss convolutional neural network},
  author = {Tu Bui and Leonardo Ribeiro and Moacir Ponti and John Collomosse},
  journal = {Computer Vision and Image Understanding},
  year = {2017},
  volume = {164},
  pages = {27--37},
  issn = {1077-3142},
  doi = {http://dx.doi.org/10.1016/j.cviu.2017.06.007},
  url = {http://www.sciencedirect.com/science/article/pii/S1077314217301194},
  publisher = {Elsevier}
}