3D Virtual Human Shadow (3DVHshadow)

Farshad Einabadi, Jean-Yves Guillemaut and Adrian Hilton

Centre for Vision, Speech and Signal Processing (CVSSP), University of Surrey, Guildford, England

This page contains download links to the synthetic datasets used in the paper "Learning Projective Shadow Textures for Neural Rendering of Human Cast Shadows from Silhouettes". Before you download the datasets, you must first accept the licence conditions below.

The datasets are freely available under the following terms and conditions:

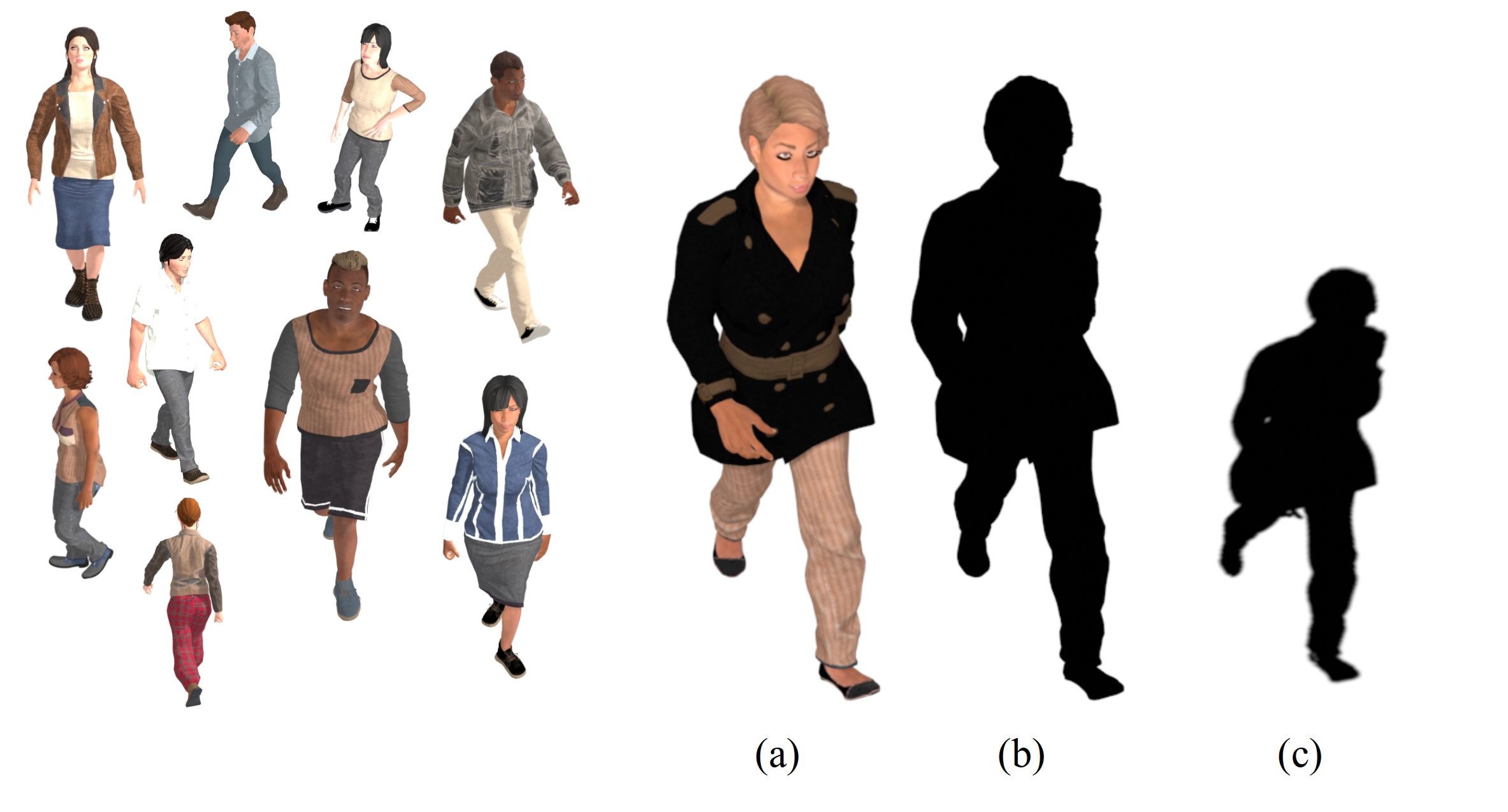

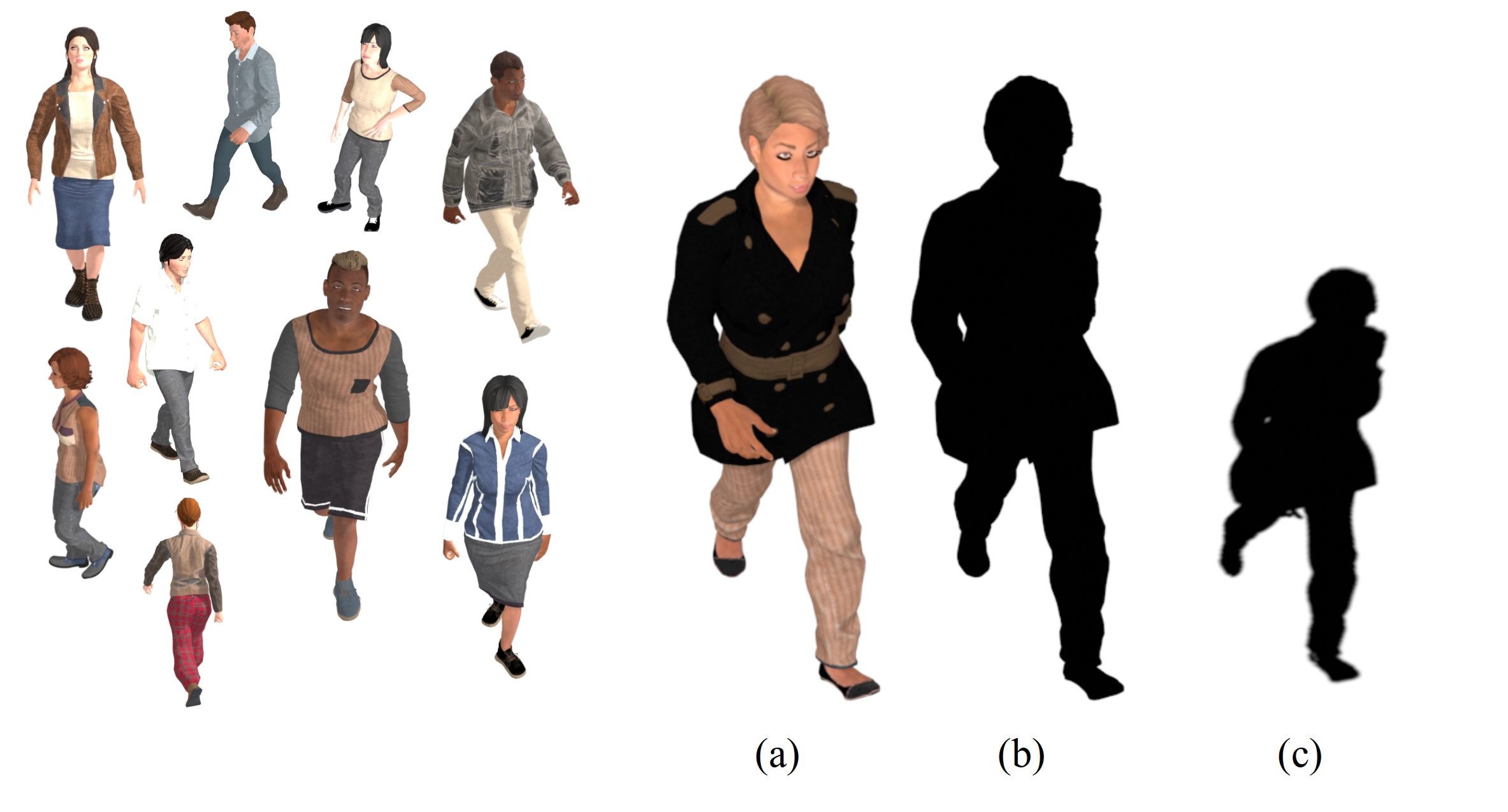

3DVHshadow contains images of diverse synthetic humans generated to evaluate the performance of cast hard shadow algorithms for humans. Each dataset entry includes (a) a rendering of the subject from the camera view point, (b) its binary segmentation mask, and (c) its binary cast shadow mask on a planar surface -- in total 3 images (headline figure). The respective rendering metadata such as point light source position, camera pose, camera calibration, etc. is also provided alongside the images.

To synthesise shadows of people we use the 3DVH virtual human dataset [1] which contains 418 3D parametric models of people. These models are generated based on 14 male and 11 female bodies -- with 8 to 48 modifications per body in shape and pose parameters, hair and clothing. 3DVH models are animated using the skeletal motion capture sequences from the Adobe Mixamo database; in total, 50 different walking sequences are applied randomly to the parametric models. The clothing of people in 3DVH are from Adobe Fuse. In this dataset, we split the models into two sets of sizes 311 and 107 respectively for the training and evaluation. The Eevee rendering engine of Blender 3.0 is employed to render the scene contents. Each subject is assigned with a random posture and is rendered under 80 combinations of random point light and camera poses.

Please refer to the publication above for details of the dataset generation.

[1] A. Caliskan, A. Mustafa, E. Imre, A. Hilton: Multi-view Consistency Loss for Improved Single-Image 3D Reconstruction of Clothed People. ACCV (1) 2020: 71-88.